Meta’s AI research team, in partnership with the Basque Center on Cognition, Brain, and Language, has unveiled an artificial intelligence model that can decode brain activity into text with up to 80% accuracy. The advance holds significant promise for people who have lost the ability to speak, offering a potential route to restored communication. So, can AI read your mind? Let’s find out.

Non-Invasive Brain Activity Decoding

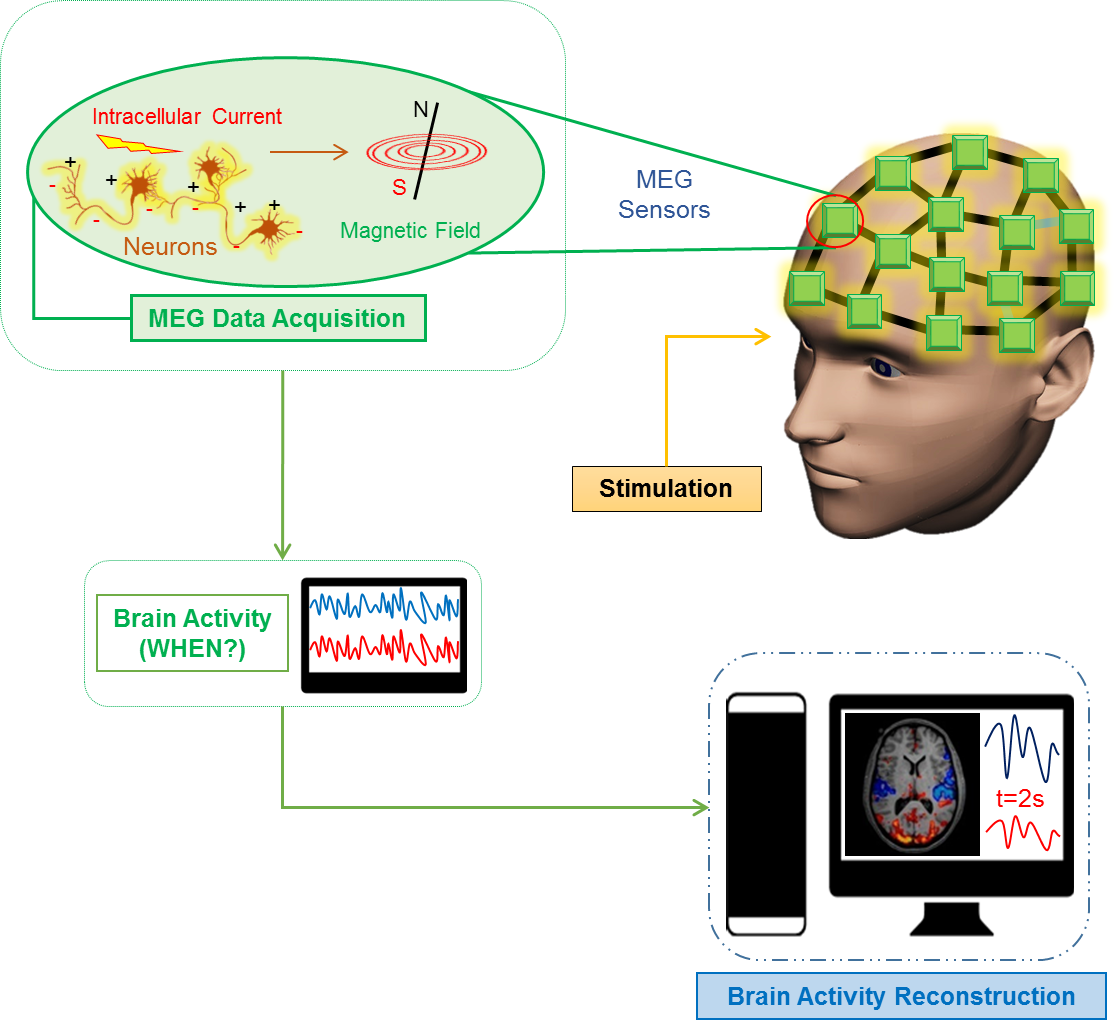

Traditional brain-computer interfaces often require surgical implants to monitor neural activity. In contrast, Meta’s approach relies on non-invasive techniques, magnetoencephalography (MEG) and electroencephalography (EEG), which capture brain activity without surgery. In the study, the AI model was trained on brain recordings from 35 volunteers as they typed sentences. When given new sentences, the model predicted up to 80% of the typed characters from MEG data, at least twice the performance achieved with EEG-based decoding.
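To make the "80% of the characters" figure concrete, here is a minimal Python sketch of one way a character-level score could be computed. It is an illustration only: the function name `char_accuracy`, the position-by-position comparison, and the sample strings are all assumptions made for this example, not the metric or data used in the study.

```python
def char_accuracy(predicted: str, typed: str) -> float:
    """Fraction of characters in `typed` that `predicted` matches at the same position.

    Hypothetical helper for illustration; the study's actual scoring may differ.
    """
    if not typed:
        raise ValueError("typed sentence must not be empty")
    # Compare the two strings position by position; extra or missing
    # characters in `predicted` simply count as misses.
    matches = sum(p == t for p, t in zip(predicted, typed))
    return matches / len(typed)


typed = "hello world"
predicted = "hellp wprld"  # made-up decoder output with two wrong characters
print(f"{char_accuracy(predicted, typed):.0%}")
```

A score of 0.8 under this simple measure would mean four out of every five characters were decoded correctly in place.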


Understanding the Mechanism

MEG measures the magnetic fields produced by neural activity. It requires a magnetically shielded room, and participants must remain still for the readings to be accurate. The method is highly precise, but for now it is impractical outside controlled laboratory settings. In addition, the technology has been tested mainly on healthy individuals, so its effectiveness for people with neurological impairments has yet to be fully explored.

Insights into Language Formation

Beyond translating thoughts into text, Meta’s AI model provides valuable insights into the brain’s process of converting ideas into language. By analyzing MEG recordings, researchers can observe brain activity on a millisecond scale, revealing the transformation of abstract thoughts into words, syllables, and even the specific finger movements involved in typing. A notable discovery is the brain’s use of a ‘dynamic neural code,’ a mechanism that links various stages of language formation while retaining previous information. This finding sheds light on how individuals fluidly construct sentences during speech or typing.

Future Implications and Ongoing Research

While this research marks a significant step toward non-invasive brain-computer interfaces, several challenges remain before practical applications can be realized. Improving decoding accuracy and addressing the logistical constraints of MEG technology are critical areas for future development. To advance this field, Meta has announced a $2.2 million donation to the Rothschild Foundation Hospital to support ongoing studies. Collaborations with European institutions such as NeuroSpin, Inria, and CNRS are also underway, with the aim of refining and expanding on these early efforts.

This research underscores the potential of AI to transform communication, particularly for those unable to speak, and it offers new insight into the intricate processes by which the brain forms language.